Overview

In 2023, the Cambridge Dictionary selected ‘hallucinate’ as its Word of the Year, recognising its growing importance in the context of artificial intelligence (AI).

The choice reflects the word’s updated meaning and shows how quickly the vocabulary of AI is reshaping everyday language.

What puts ‘hallucinate’ in the spotlight as the 2023 Word of the Year?

The ongoing discourse around generative artificial intelligence is the driving force.

This year, the rise of user-friendly tools like ChatGPT, Bard, DALL-E, and Bing AI, all built on large generative models, has ignited widespread interest.

In this dynamic landscape, familiar terms like ‘hallucinate’ have acquired fresh AI-related meanings. The Cambridge Dictionary team’s selection of ‘hallucinate’ for 2023 reflects a concern at the core of current AI discussions: whether the output of these tools can be trusted.

Generative AI is powerful, but people are still learning how to make good use of its strengths while working around its current limitations. Doing so demands a heightened awareness of both its potential and its drawbacks.

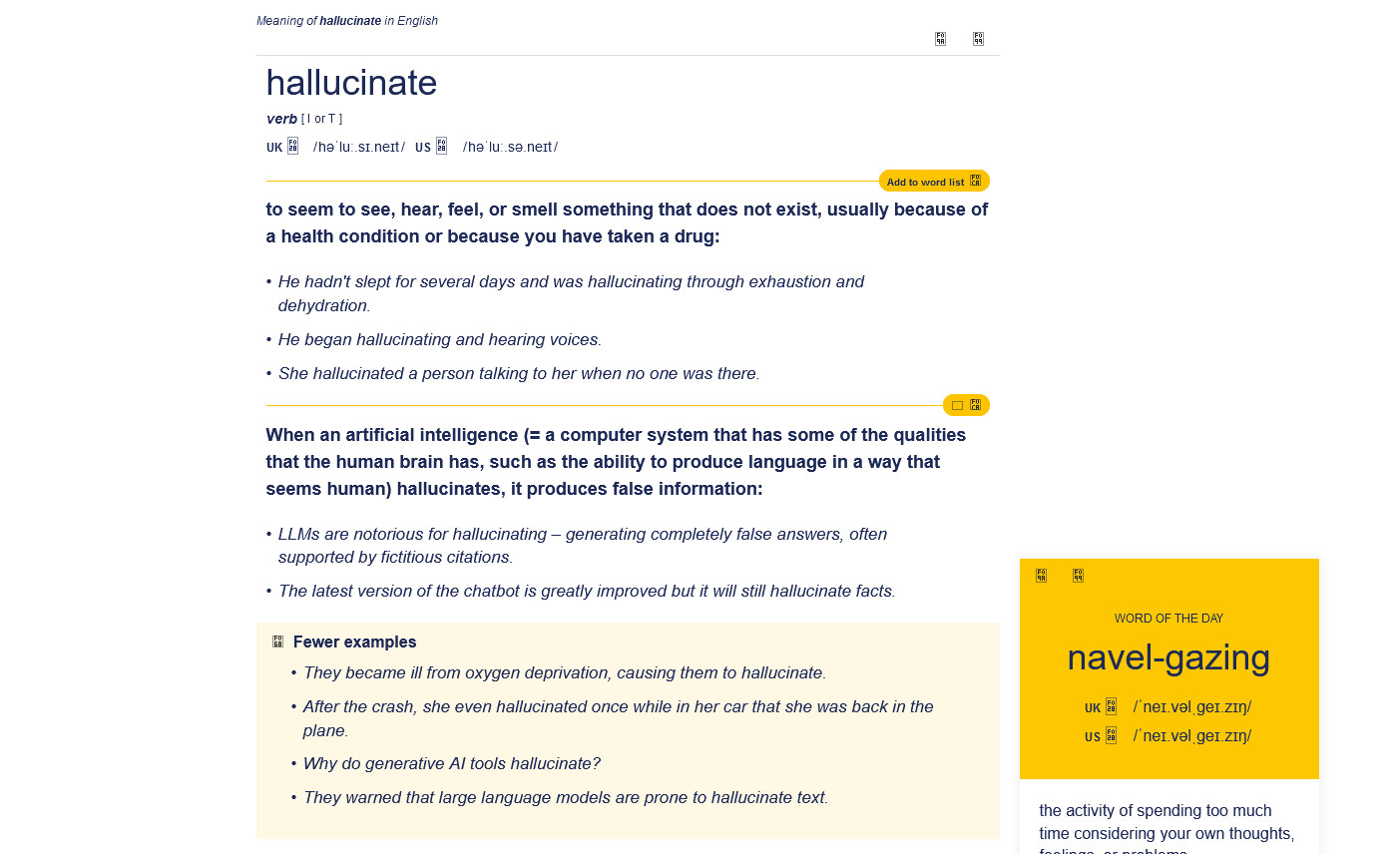

Until 2023, the Cambridge Dictionary defined ‘hallucinate’ only as seeming to see, hear, feel, or smell something that does not exist, usually because of a health condition or drug use.

However, as generative AI tools like ChatGPT and Bard, which use large language models (LLMs), gained prominence, the meaning of ‘hallucinate’ evolved. This year, the Cambridge Dictionary updated its definition to include, “When an artificial intelligence hallucinates, it produces false information.”

Generative AI responds to prompts using patterns learned from extensive training data. Tools like ChatGPT and Bard rely on LLMs, which learn to produce human-like text from vast amounts of human-created material. However, Wendalyn Nichols, Cambridge Dictionary’s publishing manager, emphasises the importance of critical thinking when using AI tools, noting that while AIs excel at processing vast amounts of data to extract specific information, the more originality is asked of them, the more likely they are to stray from accuracy.

OpenAI is among the tech companies taking substantial measures to curb hallucinations, particularly with its GPT-4 model, which contributes to its perceived leadership in the field. The specifics of OpenAI’s approach remain undisclosed. However, Chief Scientist Ilya Sutskever suggests that Reinforcement Learning from Human Feedback (RLHF) could be a potential avenue for reducing hallucinations.

Cambridge Dictionary acknowledges 2023 as a remarkable year for AI-related terms, with additions like “large language model,” “AGI,” “generative AI,” and “GPT” reflecting the evolving landscape of artificial intelligence in language and communication.