On Tuesday, December 26, the Indian government directed social media companies to curb deepfake content. This is a significant step in addressing the challenges posed by advanced AI. Deepfake technology, which can produce highly realistic yet deceptive videos, has become a growing concern worldwide.

Ministry of Electronics and Information Technology (MeitY)

The Ministry of Electronics and Information Technology (MeitY) has asked platforms such as Facebook and Instagram to remove deepfake content and to comply with the Information Technology (IT) Rules. These rules require social media platforms to prevent 11 listed harms, including threats to national security; child pornography; obscenity; disinformation; harassment based on gender, religion, or race; unauthorized sharing of personal information; impersonation; commercial fraud; deception in online games; software viruses; and any other unlawful activity.

Rajeev Chandrasekhar, the Minister of State for Electronics and Information Technology, said that a formal advisory had been issued that day. The advisory sets out agreed-upon procedures to ensure that users of these platforms do not post content prohibited under Rule 3(1)(b) of the IT Rules. Any violation that is observed or reported will carry legal consequences.

2023 saw rapid advances in AI and, with them, a surge of deepfakes on social media. Deepfakes of several Indian actresses and business tycoons have surfaced online, highlighting the urgent need for regulation.

One notable incident involved a fake video of Brad Garlinghouse, CEO of the US-based crypto solutions provider Ripple. The realistic-looking video showed him promoting a fraudulent crypto scheme, and Google did not take it down immediately despite being alerted.

PM Modi on deepfake content

Indian Prime Minister Narendra Modi, who has himself been the target of an AI deepfake, has emphasized the need to regulate AI and to use new technologies with caution. He said, “We have to be careful with new technology. If these are used carefully, they can be very useful. However, if these are misused, it can create huge problems. You must be aware of deepfake videos made with the help of generative AI.”

He added that such videos can look very authentic, so people must be extremely vigilant before accepting a video or an image as genuine. He also called for a global framework for AI.

On another occasion, PM Modi called deepfakes one of the most significant threats currently facing India, warning that they could cause chaos in society. He also urged the media to help educate people about this growing issue.

This situation underscores the importance of awareness, regulation, and technological safeguards in ensuring the responsible use of AI. It’s a reminder that while AI has the potential to greatly benefit society, it also poses significant challenges that need to be addressed.

How to identify a deepfake video

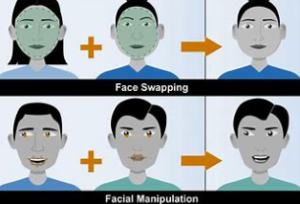

Detecting deepfakes, artificial videos created with advanced AI, can be challenging, but certain signs can help. Look for odd visual artifacts, inconsistent facial features, or unusual movements. Pay attention to biological signals such as abnormal blinking or heartbeat patterns. Check whether the audio matches the speaker’s lip movements. Because each person has distinctive speech patterns and gestures, departures from how the real person talks or moves can be a clue. Deepfakes also often display unnatural emotions and temporal inconsistencies, meaning the video does not flow smoothly from frame to frame.

Analyzing both the visual and temporal features of a video can reveal such abnormalities, and tools like Sensity can assist in detecting deepfakes. However, no technique catches every deepfake, so it’s crucial to think critically and verify the source of any media content you consume.
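Blinking, one of the biological signals mentioned above, can be measured from facial landmarks. The sketch below assumes that a separate face-landmark detector (not shown) supplies six (x, y) points per eye for every frame, and it uses the eye aspect ratio (EAR) described by Soukupová and Čech (2016) to count blinks and compute a blink rate. The threshold of 0.21 and the two-frame minimum are illustrative assumptions, not calibrated values. An unusual blink rate is at most one weak signal, since newer deepfake generators can reproduce natural blinking.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR for six eye landmarks ordered p1..p6.

    p1 and p4 are the eye corners; p2 and p3 lie on the upper lid,
    directly above p6 and p5 on the lower lid. EAR drops toward 0
    as the eye closes.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_per_frame: Sequence[float],
                 threshold: float = 0.21,   # illustrative, not calibrated
                 min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames below `threshold`."""
    blinks = 0
    run = 0
    for ear in ear_per_frame:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # the clip ended while the eye was closed
        blinks += 1
    return blinks


def blinks_per_minute(ear_per_frame: Sequence[float], fps: float, **kwargs) -> float:
    """Blink rate over the whole clip, given its frame rate."""
    minutes = len(ear_per_frame) / fps / 60.0
    return count_blinks(ear_per_frame, **kwargs) / minutes


if __name__ == "__main__":
    # Synthetic one-minute clip at 30 fps: eyes open (EAR 0.3) with six
    # three-frame blinks (EAR 0.1).
    series = ([0.3] * 297 + [0.1] * 3) * 6
    print(blinks_per_minute(series, fps=30))  # 6.0
```

A rate far outside a typical human baseline could justify a closer look, but it should be combined with the other checks above rather than treated as proof.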

Strategies to Mitigate the Risks

Because AI tools are increasingly accessible and capable, preventing the creation of deepfakes is a complex problem. Still, several strategies can help reduce the risks:

- Enhancing Detection Technologies: Invest in the development of more advanced technologies to detect deepfakes.

- Strengthening Identity Verification Systems: Improve systems for verifying identity, including the use of biometric and liveness verification, to prevent deepfakes from being used in identity theft.

- Promoting Education and Awareness: Increase public awareness about the existence of deepfakes and the potential for their misuse.

- Implementing Regulation and Policy: Enact regulations and policies that prohibit the creation and distribution of malicious deepfakes.

- Tagging Content: Proactively label content as authentic or AI-generated at the point of creation.

- Adopting Security Procedures: Implement robust security procedures, such as automated verification checks in any process that disburses funds.
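A minimal sketch of the content-tagging idea follows. At generation time, a label such as "ai-generated" is bound to a SHA-256 hash of the content and signed with an HMAC. Anyone holding the key can then detect whether the label or the content was altered afterward. The shared secret key, the field names, and the label values are all hypothetical. Real-world provenance efforts, such as the C2PA standard, use public-key signatures and standardized manifests rather than a shared secret.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret known to the generator and the verifier.
SECRET_KEY = b"demo-key-not-for-production"


def _payload(label, digest: str) -> bytes:
    # Canonical JSON so the same label and hash always produce the same bytes.
    return json.dumps({"label": label, "sha256": digest}, sort_keys=True).encode()


def tag_content(content: bytes, label: str, key: bytes = SECRET_KEY) -> dict:
    """Return a tag that binds `label` to the exact content bytes."""
    digest = hashlib.sha256(content).hexdigest()
    signature = hmac.new(key, _payload(label, digest), hashlib.sha256).hexdigest()
    return {"label": label, "sha256": digest, "signature": signature}


def verify_tag(content: bytes, tag: dict, key: bytes = SECRET_KEY) -> bool:
    """True only if the content is unchanged and the tag was signed with `key`."""
    digest = hashlib.sha256(content).hexdigest()
    if digest != tag.get("sha256"):
        return False  # content was modified after tagging
    expected = hmac.new(key, _payload(tag.get("label"), digest), hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected, str(tag.get("signature", "")))


if __name__ == "__main__":
    video = b"...synthetic video bytes..."
    tag = tag_content(video, "ai-generated")
    print(verify_tag(video, tag))                                # True
    print(verify_tag(video, dict(tag, label="authentic")))       # False: label changed
    print(verify_tag(video + b"edit", tag))                      # False: content changed
```

The tag only helps if it travels with the content and platforms check it. Stripping the tag entirely is not detected by this scheme, which is one reason tagging is listed alongside detection and regulation rather than as a complete solution.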

While these measures can provide some protection, it’s crucial to remember that individuals also have a responsibility to verify the authenticity of the content they consume and share.